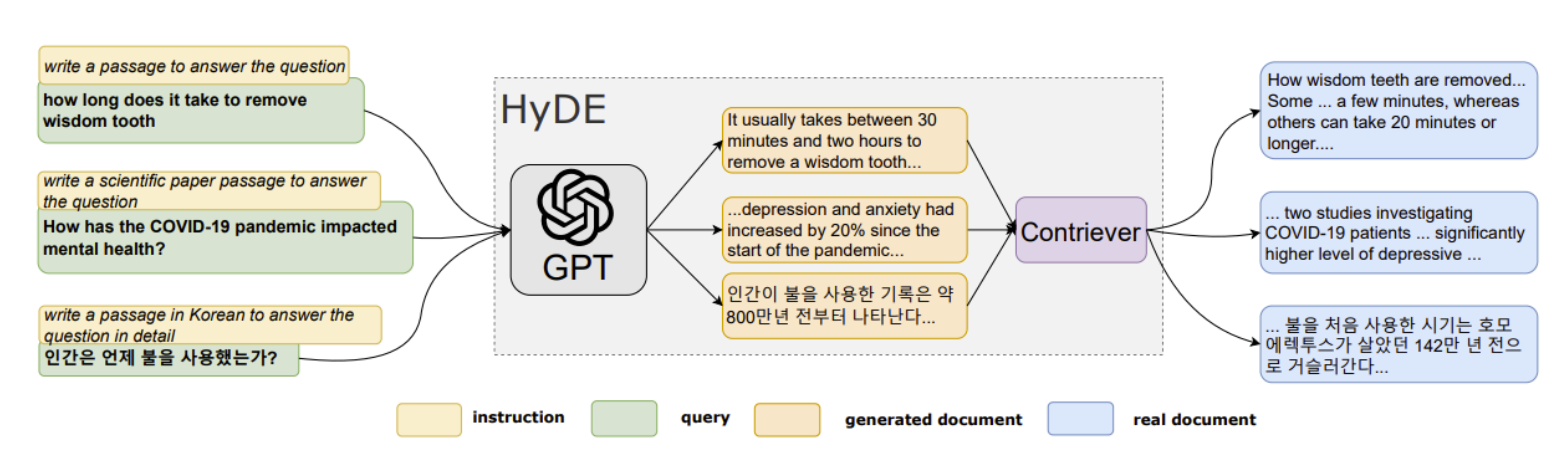

During the third presentation of the LLM reading group, Dr. Shengyao Zhuang from CSIRO provided an overview of the use of LLM architectures in information retrieval.

The talk began with a review of classic language models, including bag-of-words models and neural ranking models. Dr. Zhuang then introduced how to pre-train and fine-tune BERT-like language models for ranking, covering some common challenges and their corresponding solutions. The talk then turned to recent advancements that combine LLMs such as T5, LLaMA, and GPT-3/4 with ranking. In particular, Dr. Zhuang showed that LLM-based retrieval systems have an excellent ability to perform zero-shot ranking. Finally, the talk ended by identifying a few future research directions.

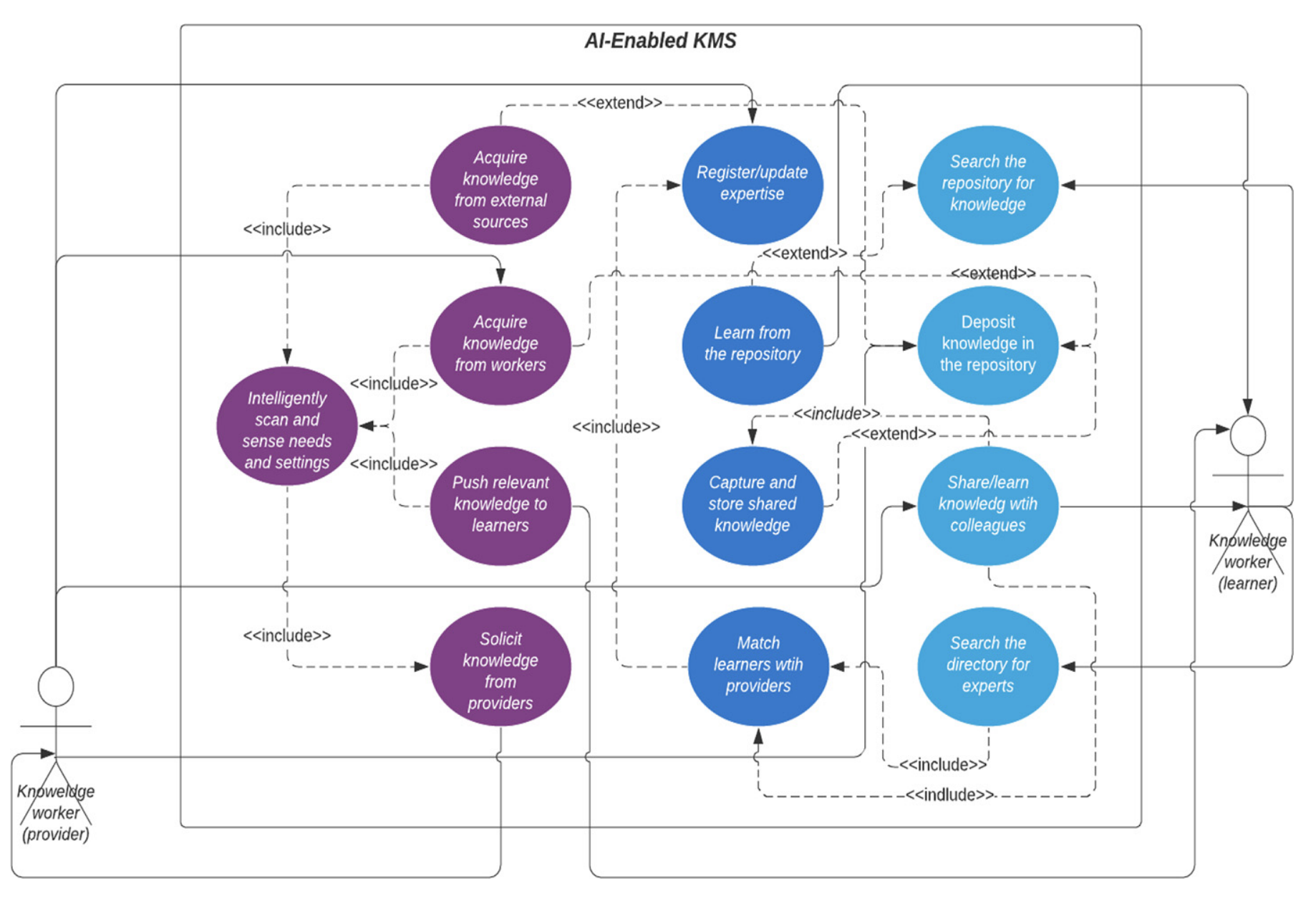

Computational Social Science Reading Group Series: AI-enabled knowledge sharing and learning: redesigning roles and processes