Krishna Dermawan initiated the discussion about responsible AI, which was based on the book “Responsible Artificial Intelligence: How to Develop and Use AI in a Responsible Way”. The notes from the discussion are shared below.

Imbuing technologies with “responsibility” is connected to their operationalisability (the ability to be put into action) and generalisability (the ability to be applied to different contexts, e.g. private vs public) (Shazia). However, the link between operationalisability and responsibility is complicated: too many criteria for responsibility can be stifling; too few, and they become vague and unhelpful (Abraham).

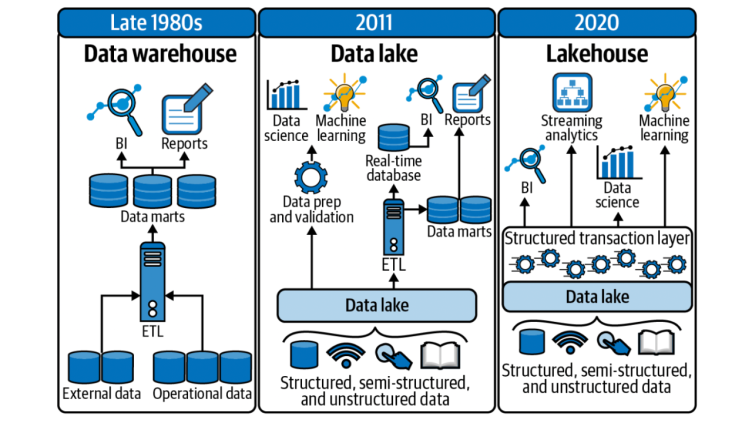

Imbuing responsibility touches many aspects of technology development. Ethical problems can already exist in the data (how it is gathered, whether it contains bias), in data management, in algorithm design, and so on (Hechuan).

Context also matters: how responsible you need to be depends on the context’s “sensitivity to ethics” (thank you Elyas, I love this term!).

Furthermore, there is a dilemma between businesses’ interest in keeping their technologies secret so that they cannot be copied and the notion that responsibility equals transparency (Elyas). This is a fundamental dilemma for organisations, especially in the private sector (Elyas, Shazia). Private organisations are so big and influential that they can become “autocracies”, possibly so powerful that they cannot be stopped (e.g. OpenAI and ChatGPT) (Daisy). To counter this, there are external (governance) and internal (assurance) mechanisms that help organisations remain both competitive and responsible (Catherine); these mechanisms enforce both preventative and punitive actions (Shazia). But are these mechanisms in place for new technologies, and how should they be set up? (This is also a fundamental question, thank you Catherine.)

For practitioners and developers, there is also an issue of logic (or incentive?) here. Developers are interested in the practical considerations that get the technology implemented (Junliang). There are concerns about design, data, and unfair results, but the driving force behind the work seems to be “how do I implement this?”.

Hence, as practitioners, it is useful to understand that responsibility exists on multiple layers of institutional logic (e.g. Thornton & Ocasio 1999). There is the ethical layer, the legal layer, the operational layer, etc. Each layer enacts different paradigms, assumptions and interests; for instance, the legal layer is constantly focussed on remedies. Each layer also contains heterogeneous ideas. Moreover, these logics differ across countries (Abraham).

For Abraham, some critical issues for responsible technologies today are:

- Comprehensibility, but in what way? (Who needs to understand, and at what level of detail?)

- Discrimination and fairness, but how? (Fairness is not homogeneous: is it equal opportunity, equal outcome, etc.?)

- Has manipulation been embedded in the technology (intentionally or unintentionally)?

- Who is liable? Data collectors, modellers, businesses, users?

Below are resources recommended by Abraham:

- Archive of Information Resilience